Reflections on Project Haiku: WebRTC

This is part of a series of posts I’m writing to put down my thoughts on the recently retired Mozilla Connected Devices Haiku project. We landed on a WebRTC-based implementation of a 1:1 communication device. For an overview of the project as a whole, see my earlier post.

This was one of those insights that seems obvious with hindsight. If you want to allow two people to communicate privately and securely, using non-proprietary protocols, and have no need or interest in storing or mediating this communication, you want WebRTC.

For Project Haiku we had a list of reasons for not wanting to write a lot of server software. Mozilla takes user privacy seriously, and the best way to protect user data is to not collect it in the first place. We also wanted to minimize lock-in, and make the product easily portable to other providers. The convenience of cloud services comes at a price: it can be hard to move once you start investing time into using a service. Our product aimed to facilitate communication between a grandparent and grandchild. We didn’t want to intrude into that by up-selling some premium service. There really wasn’t much the server would need to do if we did this right.

Connect us and go away

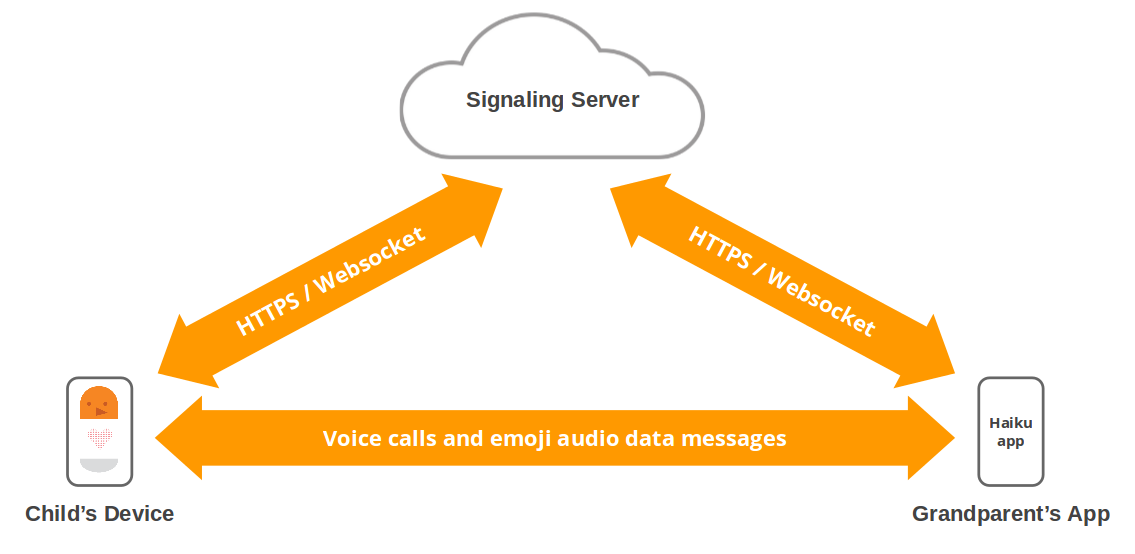

Here’s how it worked. Grandparent and grandchild want to talk more, so the grandparent (or parent) installs Device A in the child’s home and connects it to WiFi. The grandparent can either install the app or set up their own Device B. An invitation to connect/pair can be generated from either side, and sent to the other party. Once “paired” in this way, when both devices (peers) connect to the server, a secure channel is negotiated directly between the peers and the server’s work is done. The actual messages and data are sent directly between the peers.
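To make the invitation step concrete, here is a minimal sketch of how a server might track invitations and pairings. It is not the code we wrote for Haiku: the `PairingRegistry` class, its method names and the peer ids are all hypothetical, and a real service would persist this state and expire unused invitations.

```javascript
const crypto = require('crypto');

// Hypothetical in-memory store of invitations and pairings.
class PairingRegistry {
  constructor() {
    this.invites = new Map(); // invite code -> id of the inviting peer
    this.pairs = new Map();   // peer id -> id of its paired peer
  }

  // Either side can create an invitation. The code is then sent to the
  // other party by whatever means is convenient (assumed out of band).
  createInvite(peerId) {
    const code = crypto.randomBytes(16).toString('hex');
    this.invites.set(code, peerId);
    return code;
  }

  // Redeeming consumes the invite and records the pairing both ways.
  redeemInvite(code, peerId) {
    const inviter = this.invites.get(code);
    if (!inviter || inviter === peerId) return false;
    this.invites.delete(code);
    this.pairs.set(inviter, peerId);
    this.pairs.set(peerId, inviter);
    return true;
  }

  // Look up who a peer is paired with, or null if unpaired.
  peerOf(peerId) {
    return this.pairs.get(peerId) || null;
  }
}

// Usage: Device A invites, the grandparent's app accepts.
const registry = new PairingRegistry();
const invite = registry.createInvite('device-a');
const accepted = registry.redeemInvite(invite, 'grandparent-app');
```

Note that the pairing is all the server has to remember. It never sees the messages themselves, which is the whole point.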

STUN, TURN and making it work

On the server side, we need to authenticate each incoming connection and shuttle the negotiation of offers and capabilities between two clients. In WebRTC terminology, this broker is called a signalling server. We built a simple proof of concept using node.js and WebSocket. The other necessary components of this system are STUN and TURN servers, both well defined and with existing open source implementations.

The complexities associated with WebRTC kick in with multi-party conferencing, where different data streams might need to be composited together on the server or client or both. Then there’s the need for real-time transcoding and re-sampling of audio and video streams to fit the capabilities of the different clients wanting to connect and the networks they are connecting over, and the need to interface with traditional telephony stacks and networks. In this landscape, the very limited set of parameters needed for Haiku’s WebRTC use-case makes the solution relatively simple: we just don’t need most of the things that bring along all that complexity.
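The broker's job of authenticating a connection and passing offers, answers and ICE candidates along to the paired peer can be sketched in a few lines. This is an illustration rather than our proof of concept: the `SignalBroker` class, the token and pairing maps and the message shape are assumptions, and a "socket" here is any object with a `send(string)` method, such as a WebSocket connection.

```javascript
// Transport-agnostic sketch of a signalling broker (hypothetical names).
class SignalBroker {
  constructor(pairs, tokens) {
    this.pairs = pairs;      // Map: peer id -> paired peer id
    this.tokens = tokens;    // Map: auth token -> peer id
    this.online = new Map(); // Map: peer id -> connected socket
  }

  // Authenticate an incoming connection. Returns the peer id, or null
  // if the token is not recognised.
  connect(token, socket) {
    const peerId = this.tokens.get(token);
    if (!peerId) return null;
    this.online.set(peerId, socket);
    return peerId;
  }

  disconnect(peerId) {
    this.online.delete(peerId);
  }

  // Forward a negotiation message to the sender's paired peer. The
  // payload is passed through untouched; the broker only adds `from`.
  relay(fromId, message) {
    const toId = this.pairs.get(fromId);
    const target = toId && this.online.get(toId);
    if (!target) return false;
    target.send(JSON.stringify({ ...message, from: fromId }));
    return true;
  }
}

// Usage with in-memory stand-ins for real sockets.
const pairs = new Map([['device-a', 'device-b'], ['device-b', 'device-a']]);
const tokens = new Map([['token-a', 'device-a'], ['token-b', 'device-b']]);
const broker = new SignalBroker(pairs, tokens);
const inboxB = [];
broker.connect('token-a', { send: () => {} });
broker.connect('token-b', { send: (m) => inboxB.push(JSON.parse(m)) });
broker.relay('device-a', { type: 'offer', sdp: 'placeholder-sdp' });
```

Once the peers have exchanged offer, answer and candidates this way, they talk to each other directly and the broker has nothing more to do.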

The client and the catch

There is always a catch, isn’t there? Almost all WebRTC client implementation effort to date has come from desktop browser vendors. Search the web and most of what you find about WebRTC assumes you are using a conventional browser like Firefox, Chrome/Chromium etc. That’s no use for the Haiku device, where we are running in an embedded Linux environment, without the expected display or input methods and with limited system capabilities. Existing standalone headless web clients (such as curl, or node.js’ built-in HTTP modules) do not yet speak WebRTC. There is some useful work in the wrtc module that provides native bindings for WebRTC, provided you can compile for your architecture. We were able to use this to put together a simple proof of concept, running on Debian on a BeagleBone Black. wrtc gives you the PeerConnection and DataChannel but no audio/video media streams. It was enough for us to taste sweet prototype success: a headless single-board computer securely contacting and authenticating at our signalling server, and conducting a P2P, fully opaque exchange of messages with a remote client.

Going from this smoke test of the concept to a complete and robust implementation is definitely doable, but it’s not a trivial piece of work. Our user studies concluded that the asynchronous exchange of discrete messages was good for some scenarios, but the kids and grandparents also wanted to talk in real time. So picking this back up means solving enough of the headless WebRTC client problem to enable audio streaming between devices and, with the added need to support a mobile app as a client, likely transcoding audio too. Bug 156 on the wrtc module’s repo discusses some options.

What next?

Putting the Haiku project on hold has meant walking away from this. I hope others will arrive at the same conclusions and we’ll see WebRTC adoption expand beyond the browser. There are so many possibilities. Just stop for a moment and count the number of ways in which one device needs to talk securely to another using common protocols. Yet for reasons that suddenly seem unclear, this conversation is gated and channeled (and observed and logged) through a server in the cloud.

Both the desktop and mobile browser represent just one way to connect users to the Web. There are others, and we should be looking into them. Although Mozilla exists to promote and protect the open web, it is historically a browser company. I can’t tell you the number of long conversations I’ve had with colleagues which end with “wait, you mean this isn’t happening in the browser?” Moving into IoT and Connected Devices means challenging this. We’ve set aside that challenge for now, but I sincerely hope we’ll come back to it.