Emoji + Voice Prototype

Project Haiku Update

At Mozilla, I’m still working with a team on Project Haiku. Over the summer we had closed in on a wearable device used for setting and seeing friends’ status. It took a while for that to crystallize, though, and as we started the process of building an initial Bluetooth wearable prototype, our team was handed an ultimatum: go faster or stop.

We combined efforts and ideas with another Mozilla team that had arrived at some very similar positions on how connected devices should meet human needs. As I write we are concluding a user study in which 10 pairs of grandparents and school-age grandchildren have been using a simple, dedicated communication device.

The premise was that these two groups want to interact more often - to be a small, more constant part of each other’s lives - and that this is impeded by needing a parent to schedule and facilitate voice/Skype/FaceTime calls. What if they had a single-button, direct connection they could use without the parent’s help?

Prototyping for the User Study

We wanted to prove/disprove this need, and to explore the relative merits of synchronous (real-time) communication in the form of a voice call, alongside asynchronous messages in the form of simple emoji messages. We built a prototype using Firefox OS on Sony phones with a simple user interface: a button for each of the emoji we’d selected, and a call/pickup/hangup button. The devices were explicitly and exclusively connected: each device would only accept incoming calls and SMS from the other in the pair, and could only dial and send SMS to that one phone.
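To make the pairing rule concrete, here is a minimal sketch of the logic in Python. It is an illustration only, not the code that ran on the devices (that was a Firefox OS system app), and the names `PairedDevice`, `normalize`, `accepts` and `outgoing_target` are hypothetical.

```python
from dataclasses import dataclass


def normalize(number: str) -> str:
    """Keep digits only, so formatting differences don't break the comparison."""
    return "".join(ch for ch in number if ch.isdigit())


@dataclass(frozen=True)
class PairedDevice:
    # Assumption: each device stores the phone number of the SIM in its partner.
    partner_number: str

    def accepts(self, sender: str) -> bool:
        """Incoming calls and SMS are accepted only from the paired number."""
        return normalize(sender) == normalize(self.partner_number)

    def outgoing_target(self) -> str:
        """Every call and every emoji SMS goes to the one paired number."""
        return self.partner_number
```

With this shape, a device paired with "+1 555 010 0001" would accept a message from "15550100001" and reject one from any other number. A real implementation would also need to handle numbers written with and without a country code, which this sketch does not attempt.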

The User Experience

For the interface, we didn’t want to get hung up on custom hardware, so we gambled that we could implement a software UI on a touch-screen phone and not carry too many smartphone expectations and baggage into the user study. We also wanted a fixed/stationary object rather than a portable one - to give the grandparent/child a physical space and representation in the home. As sound output from the phone’s built-in speaker was lacking, we attached a USB-powered external speaker. This and the phone were housed in a 3D-printed enclosure/stand, and the unit was supplied with plug-in USB power.

Pulling it Together

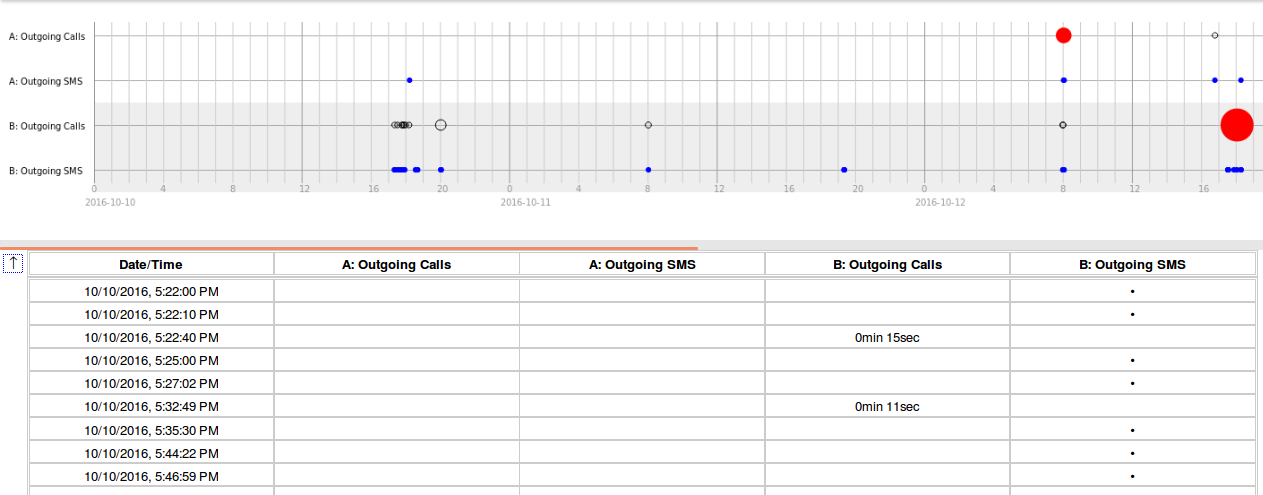

Each phone was fitted with a prepaid SIM, and configured with our custom software (a replacement system app) and the telephone number of the SIM in the paired device. This allowed us to side-step Wi-Fi setup and troubleshooting and get voice calls and SMS (to carry our emoji messages) with very little development time. We were also able to use the usage (billing) reports from the carrier as a great source of data - indicating when SMS were sent, and the time and duration of both outgoing and incoming calls. We wrote a Python script to extract this data from the PDFs the carrier provided for download, and a selection of HTML/JS charts and reports to visualize the data. That code is on GitHub, and we deployed it as a Node.js app on Heroku to share the results with the team.

Did it work?

This study and the prototype were not without problems. We had only a couple of weeks to go from nothing to working devices in study participants’ hands. Audio quality and volume were an ongoing problem. Having decided to attach an external speaker, we then had to house it as one self-contained unit. I designed this enclosure and truthfully it was a scramble. We initially wanted an enclosure just for the phone - to hold it securely at an optimum angle. Adding the speaker - which was an irregular shape - was a bit of a challenge. I didn’t hit on the best/simplest way to bring all the parts together until afterwards. Also, I was not planning on being on-site for assembly and packing, so I was trying to make something with a minimum of parts and assembly steps. And 20 of anything is quite a lot of 3D printing - it would have taken days to complete on my single printer, so we ended up sending files over to a company in the Bay Area who would get it all done and delivered to the office in time. Long story short, I flew down to the Mountain View office and with a little hacking and much teamwork we got all the units assembled, flashed, configured and shipped out in time.

I had the novel experience of being on-call for tech support as we kicked off the study. A couple of the units arrived with the speaker jack loose or damaged somehow, but we got most of them figured out (one unit sadly never really recovered and was a source of some frustration for that pair). On the plus side it meant I got to talk to the participants - with whom we otherwise had no direct contact. These conversations alone convinced me that we were onto something.

As the data came in, patterns started to emerge. We saw lots (and lots) of short (less than 45s) calls - which we deduced must have been missed calls - but also a steady stream of emoji messages. Clearly, some participants were more engaged than others - we expected that and it was the reason we stretched to build 20 prototypes. I was intrigued to see apparent “emoji conversations”, as well as isolated messages that would get “answered” some hours later.
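The short-call heuristic is simple enough to sketch in a few lines of Python. This is not the script from the study; the `CallRecord` fields and the `summarize` helper are hypothetical, and only the 45-second threshold comes from the observation above.

```python
from dataclasses import dataclass

# From the post: calls shorter than 45 seconds were deduced to be missed calls.
MISSED_CALL_THRESHOLD_S = 45


@dataclass
class CallRecord:
    # Hypothetical fields; the real carrier reports may be laid out differently.
    direction: str   # "incoming" or "outgoing"
    duration_s: int  # call duration in seconds


def is_probably_missed(call: CallRecord) -> bool:
    """A call under the threshold is treated as a likely missed call."""
    return call.duration_s < MISSED_CALL_THRESHOLD_S


def summarize(calls: list[CallRecord]) -> dict[str, int]:
    """Count total calls and split them into likely-missed and connected."""
    missed = sum(1 for c in calls if is_probably_missed(c))
    return {
        "total": len(calls),
        "probably_missed": missed,
        "connected": len(calls) - missed,
    }
```

Duration alone is a rough proxy: a very short call that was actually answered would be counted as missed, so the numbers are best read as an estimate.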

The feedback from the participant surveys confirms a few hunches: More choice of emojis! Missed calls suck, and the device wasn’t capable of making itself heard from another room, which compounded this problem. Video/“FaceTime” calls please! But underneath this, a consistent message: It was fun to have another way to communicate directly with grandchildren/grandparents. It won’t change the world, but yes, it could work.